Machine learning, a subfield of artificial intelligence, is the study of algorithms that let computers improve at a task from experience rather than from explicitly programmed rules. At its core, it involves developing algorithms that analyze data, identify patterns, and make predictions or decisions.

There are three primary paradigms of machine learning: supervised learning, where the algorithm is trained on a labeled dataset; unsupervised learning, where the algorithm explores unlabeled data to discover hidden patterns; and reinforcement learning, where the algorithm learns through trial and error, receiving rewards or penalties for its actions. These powerful techniques are the driving force behind many of the technologies we use daily, from the image recognition that tags our photos to the natural language processing that allows us to communicate with our devices.

Supervised learning represents the most common approach to machine learning, where algorithms are trained on datasets that include both input data and the correct outputs. This approach is particularly effective for tasks where historical data is available and the relationship between inputs and outputs can be clearly defined. Applications range from email spam detection, where the algorithm learns to distinguish between legitimate and unwanted messages, to medical diagnosis, where systems can identify patterns in medical images that indicate specific conditions.
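
To make the idea of learning from labeled examples concrete, here is a minimal sketch of a 1-nearest-neighbour classifier for the spam example. The two features (link count and exclamation-mark count), the tiny dataset, and the names `TRAINING_DATA` and `predict` are all hypothetical choices made for illustration; a real spam filter would use far richer features and far more data.

```python
import math

# Hypothetical labeled dataset (an assumption for illustration):
# (number of links, number of exclamation marks) -> label
TRAINING_DATA = [
    ((8, 6), "spam"),
    ((7, 9), "spam"),
    ((9, 4), "spam"),
    ((0, 1), "ham"),
    ((1, 0), "ham"),
    ((2, 1), "ham"),
]


def predict(features, training_data=TRAINING_DATA):
    """Return the label of the closest training example (1-nearest-neighbour)."""
    nearest = min(training_data, key=lambda pair: math.dist(pair[0], features))
    return nearest[1]


if __name__ == "__main__":
    print(predict((6, 7)))  # message with many links and exclamation marks
    print(predict((1, 1)))  # message with few of either
```

The "training" here is simply storing the labeled examples; the prediction for a new message is borrowed from the most similar stored one, which is about the simplest way to see how correct outputs in the dataset drive later predictions.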

Unsupervised learning approaches are used when datasets lack labeled examples, requiring algorithms to discover patterns and structures on their own. Clustering algorithms, a common unsupervised learning technique, group similar data points together, revealing hidden relationships in complex datasets. This approach is particularly valuable for exploratory data analysis, customer segmentation, and anomaly detection. The main challenge with unsupervised learning lies in evaluating the quality of results, as there are no ground-truth labels to compare against.
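
As an illustration of clustering, the following is a small sketch of k-means, one common clustering algorithm (the text above does not name a specific one, so this choice is an assumption). The function name `k_means`, the sample points, and the starting centroids are hypothetical, and the starting centroids are fixed rather than random so the run is repeatable.

```python
import math


def k_means(points, initial_centroids, iterations=10):
    """Group points around centroids by alternating assignment and update steps."""
    centroids = list(initial_centroids)
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for point in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: math.dist(point, centroids[i]))
            clusters[nearest].append(point)
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for cluster, old in zip(clusters, centroids):
            if cluster:
                new_centroids.append(
                    tuple(sum(axis) / len(cluster) for axis in zip(*cluster)))
            else:
                new_centroids.append(old)  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # assignments have stabilised
        centroids = new_centroids
    return centroids, clusters


# Hypothetical unlabeled data with two visually obvious groups.
POINTS = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]

if __name__ == "__main__":
    centroids, clusters = k_means(POINTS, [(0.0, 0.0), (10.0, 10.0)])
    print(centroids)
    print(clusters)
```

No labels are supplied anywhere; the grouping emerges purely from distances between points. Note that the number of clusters still has to be chosen by the caller, which is one facet of the evaluation difficulty mentioned above.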

Reinforcement learning mimics the way humans and animals learn through interaction with an environment, receiving feedback in the form of rewards or penalties for actions taken. This approach has shown remarkable success in game playing, where systems have achieved superhuman performance in complex games like Go and chess. The application of reinforcement learning to real-world problems, such as autonomous vehicle navigation and resource management, presents both opportunities and challenges due to the complexity and unpredictability of real environments.
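
The reward-driven trial-and-error loop can be sketched with tabular Q-learning, one standard reinforcement learning algorithm (chosen here as an assumption; the text does not name a specific method). The environment is a hypothetical five-cell corridor whose rightmost cell is the goal, and every name and hyperparameter below (`step`, `train`, `alpha`, `gamma`, `epsilon`) is illustrative rather than taken from any particular system.

```python
import random

N_STATES = 5        # corridor cells 0..4; cell 4 is the goal (hypothetical setup)
ACTIONS = (-1, +1)  # action index 0 = step left, index 1 = step right


def step(state, action_index):
    """Move within the corridor; the reward is 1.0 only on reaching the goal."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward


def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn a table of action values Q[state][action] by interacting with step()."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            # Explore with probability epsilon, or when both actions look equal.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward = step(state, action)
            # Q-learning update: nudge the estimate toward
            # reward + discounted value of the best next action.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


def greedy_policy(q):
    """Best-known action index for every non-goal cell."""
    return [0 if left > right else 1 for left, right in q[:-1]]


if __name__ == "__main__":
    print(greedy_policy(train()))
```

The agent is never told that moving right is correct; it only observes a reward at the goal, and the update rule propagates that signal backwards through the table. In this toy corridor the learned greedy policy is to step right in every cell. Real environments are far less tidy, which is the source of the challenges noted above.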

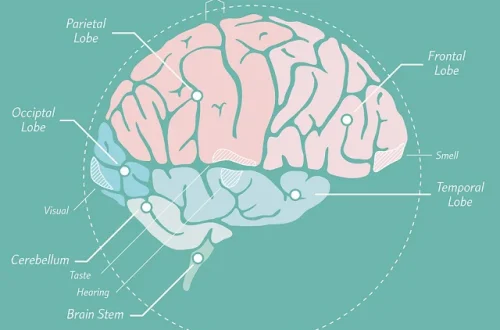

Deep learning, a subset of machine learning that uses neural networks with multiple layers, has revolutionized many areas of artificial intelligence. These networks, loosely inspired by the structure of the human brain, can learn complex representations of data that enable sophisticated capabilities in image and speech recognition. The success of deep learning has been driven by the availability of large datasets and powerful computing hardware, making it possible to train networks with millions, and in some cases billions, of parameters on vast amounts of data.
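
To show why stacking layers matters, here is a minimal sketch of a forward pass through a two-layer network. The weights are hand-picked (not learned) so that the network computes XOR, a function that no single linear layer can represent; the names `dense`, `relu`, and `forward`, and all weight values, are assumptions made for this illustration.

```python
def relu(values):
    """Rectified linear unit: negative activations are clipped to zero."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """Fully connected layer: one weighted sum plus bias per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


# Hand-chosen parameters (an assumption; real networks learn these from data).
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[1.0, -2.0]]
OUTPUT_B = [0.0]


def forward(x):
    """Input -> hidden layer with ReLU -> linear output."""
    hidden = relu(dense(x, HIDDEN_W, HIDDEN_B))
    return dense(hidden, OUTPUT_W, OUTPUT_B)[0]


if __name__ == "__main__":
    for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
        print(x, forward(x))  # 0.0, 1.0, 1.0, 0.0 respectively
```

The hidden layer re-represents the inputs so that the output layer can separate them with a simple weighted sum. Deep networks repeat this idea across many layers and learn the weights from data instead of having them set by hand.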

The development of machine learning models requires careful attention to data quality and preprocessing, as the performance of these systems depends heavily on the data used for training. Issues such as bias in training data can lead to unfair or inaccurate results, highlighting the importance of diverse and representative datasets. Data preprocessing techniques, including normalization, feature selection, and data augmentation, play crucial roles in preparing data for effective model training.
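
Normalization, the first preprocessing technique mentioned above, can be sketched in two common variants: min-max scaling to the range [0, 1] and standardization to zero mean and unit variance. The function names and sample values are hypothetical, and which variant suits a given model is a judgment call this sketch does not settle.

```python
import statistics


def min_max_scale(values):
    """Rescale values linearly so the smallest maps to 0.0 and the largest to 1.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant feature: no spread to preserve
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Shift and scale values to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


if __name__ == "__main__":
    print(min_max_scale([10, 20, 30]))  # [0.0, 0.5, 1.0]
    print(standardize([2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]))
```

In practice the scaling parameters (minimum, maximum, mean, standard deviation) should be computed on the training data only and then reused on new data, so that information from the evaluation set does not leak into training.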

Model evaluation and validation are critical steps in the machine learning pipeline, ensuring that systems perform well on new, unseen data. Techniques such as k-fold cross-validation, where the data is split into several folds and each fold in turn serves as the held-out test set while the model is trained on the remaining folds, help to assess generalization performance. The challenge lies in creating evaluation frameworks that accurately reflect real-world conditions and account for potential changes in data distributions over time.
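
Cross-validation can be sketched as follows: split the sample indices into k folds, train on all but one fold, score on the held-out fold, and repeat. The helper names, the toy threshold model, and the data are all hypothetical, and the sketch assumes the samples are already in random order (contiguous folds on sorted data would give misleading scores).

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for k contiguous, near-equal folds."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n_samples) if i < start or i >= start + size]
        yield train, test
        start += size


def cross_validate(xs, ys, fit, predict, k=5):
    """Return the accuracy on each held-out fold."""
    scores = []
    for train_idx, test_idx in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        correct = sum(predict(model, xs[i]) == ys[i] for i in test_idx)
        scores.append(correct / len(test_idx))
    return scores


def fit_threshold(xs, ys):
    """Hypothetical toy model: a threshold halfway between the two class means."""
    mean0 = sum(x for x, y in zip(xs, ys) if y == 0) / ys.count(0)
    mean1 = sum(x for x, y in zip(xs, ys) if y == 1) / ys.count(1)
    return (mean0 + mean1) / 2


def predict_threshold(threshold, x):
    return 1 if x > threshold else 0


# Hypothetical one-feature dataset with two well-separated classes.
XS = [1, 9, 2, 8, 3, 7, 4, 6, 0, 10]
YS = [0, 1, 0, 1, 0, 1, 0, 1, 0, 1]

if __name__ == "__main__":
    print(cross_validate(XS, YS, fit_threshold, predict_threshold, k=5))
```

Every sample is used for testing exactly once and never appears in the training set of the fold that tests it. Averaging the per-fold scores gives a less noisy estimate of generalization than a single train/test split, although it still assumes future data resembles the data at hand.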

As the volume and complexity of data continue to grow, machine learning is becoming an increasingly important tool for extracting insights and automating decisions across many sectors. The development of automated machine learning (AutoML) tools is making these capabilities more accessible to non-experts, enabling a broader range of professionals to use machine learning in their work. The integration of machine learning with other technologies, such as edge computing and the Internet of Things, is creating new opportunities for real-time decision making and intelligent automation.

The future of machine learning will likely see continued advances in areas such as few-shot learning, where systems can learn effectively from limited examples, and transfer learning, where knowledge gained in one domain can be applied to another. The development of more interpretable models will help to address concerns about the “black box” nature of many machine learning systems, making them more trustworthy and accountable. The integration of machine learning with other forms of artificial intelligence, such as symbolic reasoning, may lead to more robust and versatile intelligent systems.